Inside the 1.44 TB HBM3e NVIDIA HGX B200 AI Server by ASRock Rack

Slug (URL): /nvidia-hgx-b200-asrock-rack-ai-server/

Introduction

Artificial Intelligence (AI) is advancing faster than ever, and so is its demand for extreme computing power.

Enter the NVIDIA HGX B200 AI Server by ASRock Rack, equipped with a massive 1.44 TB of HBM3e GPU memory.

This high-performance system is built to handle the largest AI models, data simulations, and deep-learning workloads.

⚙️ What Is the NVIDIA HGX B200 Platform?

The HGX B200 is NVIDIA's flagship platform for AI and HPC (High-Performance Computing), built on the Blackwell GPU architecture.

It integrates eight Blackwell B200 GPUs linked through NVLink and NVSwitch chips, giving very high-speed GPU-to-GPU communication so the GPUs can share data and work together on a single large model.

- 1.44 TB of HBM3e memory in total (8 GPUs × 180 GB)

- Fifth-generation NVLink with 1.8 TB/s of bandwidth per GPU

- PCIe Gen5 support

- Optimized for AI training, inference, and simulation

- Compatible with PyTorch and TensorFlow
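Because the 1.44 TB is spread across eight GPUs, a quick capacity check helps when sizing models for this platform. The sketch below assumes 180 GB of HBM3e per GPU and decimal gigabytes, and counts only model weights (no KV cache, activations, or optimizer state). The 80% headroom factor and the helper names `total_memory_gb`, `weights_gb`, and `fits` are hypothetical choices for illustration, not an official sizing tool.

```python
# Rough capacity check: do a model's weights fit in HGX B200 GPU memory?
# Assumptions (illustrative, not an official sizing tool):
#   - 8 GPUs x 180 GB HBM3e each = 1,440 GB (decimal units)
#   - only weights are counted; KV cache, activations, and optimizer
#     state need extra memory, so we keep some headroom

NUM_GPUS = 8
HBM_PER_GPU_GB = 180.0

# Storage cost per parameter for common numeric formats
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp8": 1.0, "fp4": 0.5}


def total_memory_gb(num_gpus: int = NUM_GPUS, per_gpu_gb: float = HBM_PER_GPU_GB) -> float:
    """Aggregate HBM across all GPUs on the baseboard."""
    return num_gpus * per_gpu_gb


def weights_gb(params_billions: float, dtype: str) -> float:
    """Weight footprint: 1e9 params x bytes/param = GB (decimal)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def fits(params_billions: float, dtype: str, headroom: float = 0.8) -> bool:
    """True if weights use at most `headroom` of total HBM (hypothetical rule of thumb)."""
    return weights_gb(params_billions, dtype) <= headroom * total_memory_gb()


if __name__ == "__main__":
    print(f"Total HBM3e: {total_memory_gb():.0f} GB")
    for params, dtype in [(70, "bf16"), (405, "bf16"), (405, "fp32"), (1000, "fp8")]:
        need = weights_gb(params, dtype)
        print(f"{params}B @ {dtype}: {need:.0f} GB -> fits: {fits(params, dtype)}")
```

Under these assumptions, a 405B-parameter model in BF16 (about 810 GB of weights) fits across the eight GPUs, while the same model in FP32 (about 1,620 GB) does not.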

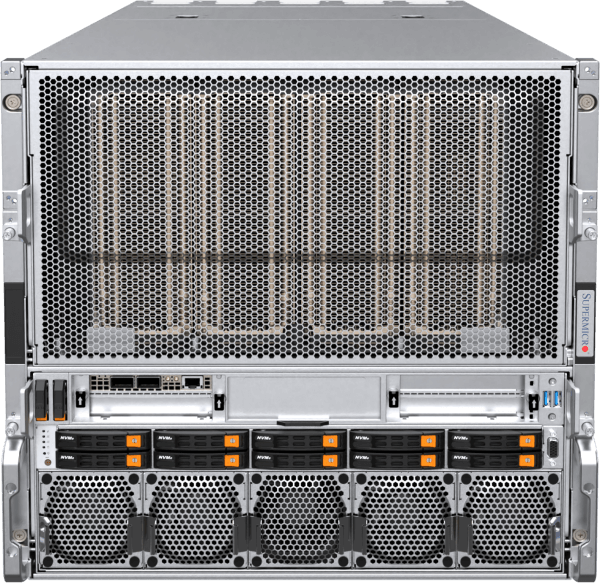

🧩 ASRock Rack’s Precision Engineering

ASRock Rack engineered a 4U rackmount chassis to host NVIDIA’s HGX B200 system.

It features optimized airflow, redundant power supplies, and air- or liquid-cooling options for stable long-term operation.

- 4U rack design

- Dual Intel Xeon or AMD EPYC support

- PCIe Gen5 slots

- 8 GPUs interconnected via NVLink

- 3500 W redundant power supplies
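In multi-GPU training, the NVLink interconnect matters mainly because gradients must be synchronized across all eight GPUs at every step. A back-of-the-envelope estimate can use the textbook ring all-reduce cost model, in which each GPU sends about 2(N-1)/N times the message size. The sketch assumes 900 GB/s per direction per GPU (half of NVLink's 1.8 TB/s bidirectional figure) and ignores latency and protocol overhead; real communication libraries such as NCCL may choose other algorithms, so treat the result as a rough estimate rather than a measurement. The function name `ring_allreduce_seconds` is hypothetical.

```python
# Back-of-the-envelope ring all-reduce time over NVLink.
# Assumptions (illustrative): 8 GPUs, 900 GB/s per direction per GPU,
# no latency/protocol overhead, bandwidth-bound ring algorithm.


def ring_allreduce_seconds(message_gb: float, num_gpus: int = 8,
                           link_gb_per_s: float = 900.0) -> float:
    """Estimate time for a ring all-reduce of `message_gb` held on each GPU.

    Ring all-reduce = reduce-scatter + all-gather; each phase pushes
    (N - 1) / N of the message through every GPU's link.
    """
    if num_gpus < 2:
        return 0.0  # nothing to synchronize on a single GPU
    traffic_gb = 2 * (num_gpus - 1) / num_gpus * message_gb
    return traffic_gb / link_gb_per_s


if __name__ == "__main__":
    # Hypothetical example: gradients of a 70B-parameter model in BF16 (2 bytes each)
    grads_gb = 70 * 2
    t = ring_allreduce_seconds(grads_gb)
    print(f"~{t * 1000:.0f} ms per full-gradient all-reduce")
```

For 140 GB of BF16 gradients this gives roughly 0.27 s per synchronization under these assumptions; halving the link bandwidth would double it.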

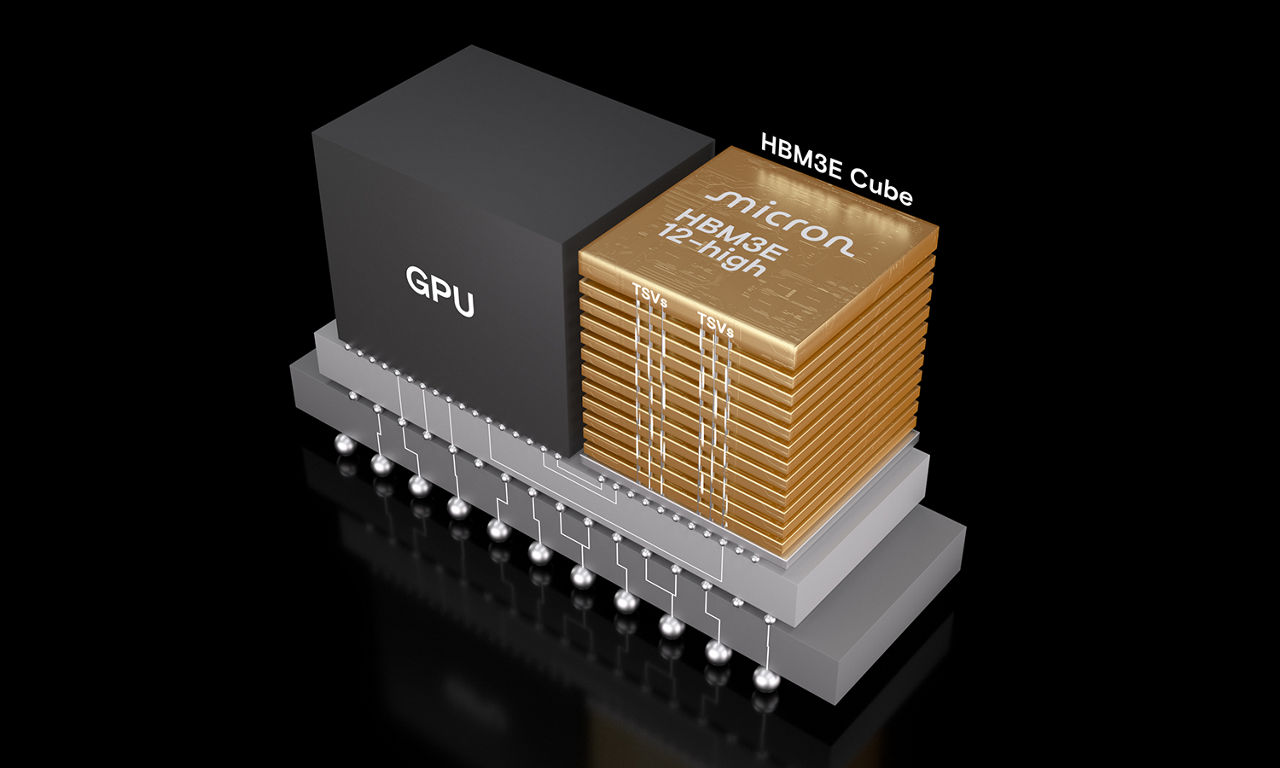

⚡ Why 1.44 TB of HBM3e Memory Matters

The HBM3e (High Bandwidth Memory 3e) standard brings massive improvements in throughput and efficiency — essential for modern AI systems.

This memory lets the GPUs stream large datasets and model parameters much faster, easing the memory-bandwidth bottlenecks that often limit AI workloads.

- Up to ~50% more bandwidth per stack than the previous HBM3 generation

- Improved energy efficiency

- Faster neural network training

- Ideal for multimodal AI and LLMs
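Why bandwidth matters for LLMs can be made concrete with a simple roofline-style bound: when a dense model generates one token at a time (batch size 1), every weight is read from memory once per token, so decode speed cannot exceed memory bandwidth divided by weight size. The sketch assumes about 8 TB/s of HBM3e bandwidth per B200 GPU and perfect scaling when weights are split across GPUs; it ignores KV-cache traffic, compute limits, and communication, so it is an upper bound, not a benchmark. The function name `max_decode_tokens_per_s` is hypothetical.

```python
# Upper bound on single-stream (batch size 1) decode speed for a dense LLM.
# Assumptions (illustrative): ~8,000 GB/s HBM3e bandwidth per GPU, weights
# split evenly across GPUs with perfect scaling, KV-cache reads and
# compute/communication costs ignored.


def max_decode_tokens_per_s(weights_gb: float,
                            bandwidth_gb_per_s: float = 8000.0,
                            num_gpus: int = 1) -> float:
    """Each generated token must stream all weights from HBM once."""
    if weights_gb <= 0:
        raise ValueError("weights_gb must be positive")
    return bandwidth_gb_per_s * num_gpus / weights_gb


if __name__ == "__main__":
    # Hypothetical 70B-parameter model stored in FP8 (1 byte/param) = 70 GB
    for gpus in (1, 8):
        bound = max_decode_tokens_per_s(70.0, num_gpus=gpus)
        print(f"{gpus} GPU(s): <= {bound:.0f} tokens/s")
```

Because the bound scales linearly with bandwidth, memory with roughly 50% more bandwidth raises this ceiling by roughly the same factor, all else being equal.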

🚀 Real-World Applications

The HGX B200 platform powers next-generation AI infrastructure worldwide. It’s ideal for:

- Large-scale AI model training (for example, LLMs in the class of GPT-5, Gemini 2, and Llama 3)

- Data analytics & scientific simulation

- Cloud AI services (AIaaS)

- Autonomous systems & robotics

- Enterprise machine learning platforms

⚠️ Challenges and Considerations

Even though the HGX B200 offers unmatched power, it comes with key challenges:

- High Cost: Over $200,000 for a fully equipped server

- Power Usage: ~10 kW or more per server under full load

- Advanced Cooling: Requires data-center-grade airflow or liquid cooling

- Infrastructure Needs: Specialized setup and management
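The power figure translates directly into operating cost. The sketch below turns a sustained power draw into yearly energy use and electricity cost. The 10 kW input follows the estimate above, while the electricity price, utilization, and PUE (power usage effectiveness, the data-center overhead multiplier for cooling and power delivery) are hypothetical placeholders to replace with your own figures; the function names are also illustrative.

```python
# Yearly energy and electricity cost for one server.
# All inputs are assumptions: adjust power, price, utilization, and PUE
# to match your own hardware configuration and facility.

HOURS_PER_YEAR = 24 * 365  # 8,760 h (ignores leap years)


def annual_energy_kwh(power_kw: float, utilization: float = 1.0, pue: float = 1.0) -> float:
    """Facility energy per year: IT load x duty cycle x hours x PUE overhead."""
    return power_kw * utilization * HOURS_PER_YEAR * pue


def annual_energy_cost(power_kw: float, price_per_kwh: float,
                       utilization: float = 1.0, pue: float = 1.0) -> float:
    """Yearly electricity cost in the same currency as `price_per_kwh`."""
    return annual_energy_kwh(power_kw, utilization, pue) * price_per_kwh


if __name__ == "__main__":
    # Hypothetical scenario: 10 kW server, 80% average load, PUE 1.3, $0.12/kWh
    kwh = annual_energy_kwh(10.0, utilization=0.8, pue=1.3)
    cost = annual_energy_cost(10.0, 0.12, utilization=0.8, pue=1.3)
    print(f"{kwh:,.0f} kWh/year, about ${cost:,.0f}/year")
```

At a constant 10 kW with no facility overhead, a single server uses 87,600 kWh per year.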

🔮 The Future of AI Computing

The combination of NVIDIA’s Blackwell architecture and ASRock Rack’s server design represents a leap forward for AI computing.

These systems will drive the next era of real-time AI, scientific discovery, and digital automation.

🏁 Conclusion

The 1.44 TB HBM3e NVIDIA HGX B200 AI Server from ASRock Rack is more than just hardware — it’s a symbol of the AI revolution.

Its unprecedented memory, power efficiency, and scalability make it a cornerstone for the most demanding AI workloads in 2025 and beyond.

Tags

NVIDIA HGX B200, ASRock Rack AI Server, HBM3e Memory, AI Hardware 2025, Blackwell GPU, High Performance Computing, AI Server Technology
